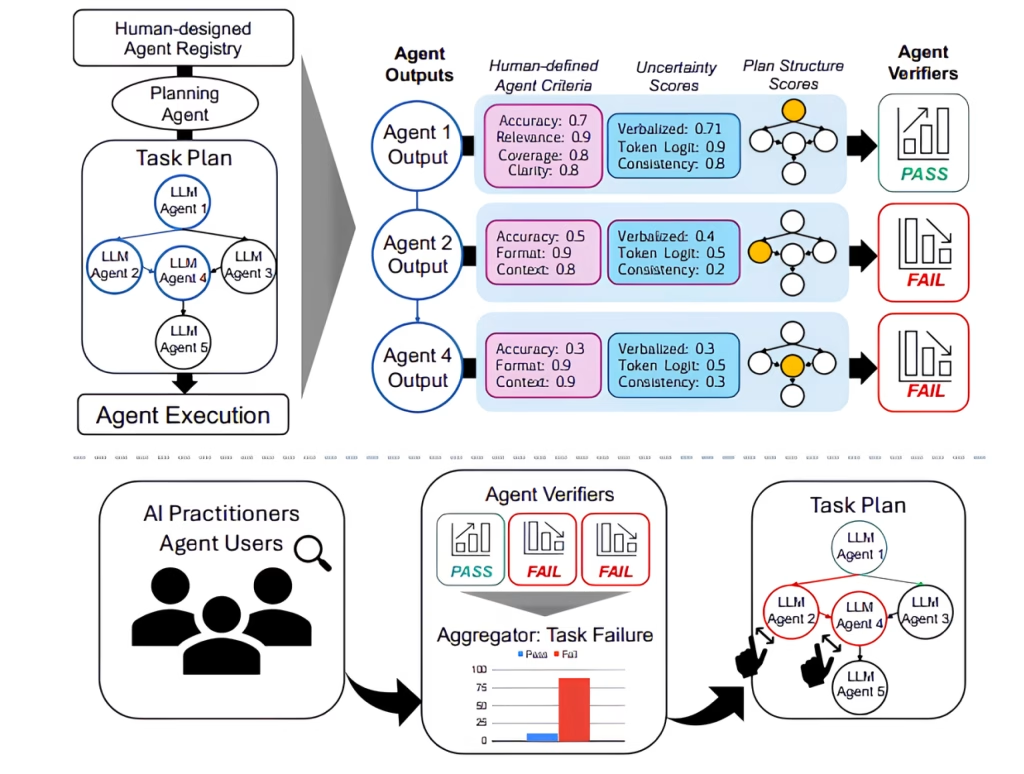

How to Evaluate the Performance of Your AI Agents

In today’s data-driven landscape, AI agents are moving beyond simple automation to act as decision-makers in enterprise environments. Whether you deploy AI to improve customer service, streamline internal workflows, or power autonomous systems, evaluating your agents’ performance is essential. A good evaluation does more than check accuracy: it tests reliability, fairness, and alignment with your business goals. This guide walks through a step-by-step approach to evaluating AI agents so you can build robust, efficient, and impactful AI-powered solutions.

1. Define Clear Objectives and Metrics

Before diving into technical metrics, it’s essential to define what you want your AI agent to accomplish. This clarity forms the foundation for all evaluation processes.

- Objectives: Explicitly outline the expected outcomes and actions of your AI agent. Are you expecting it to respond accurately to customer queries, generate leads, identify anomalies, or automate tedious tasks?

- Metrics: Once objectives are set, translate them into quantifiable measures. These metrics should reflect both the technical performance and business value. Key performance indicators often include:

- Accuracy/Error Rate: The share of outputs that are correct (accuracy) versus incorrect (error rate, which equals 1 minus accuracy).

- Precision & Recall: Especially important in classification problems. Precision is the fraction of items the agent selects that are actually relevant; recall is the fraction of all relevant items that the agent selects.

- F1-Score: The harmonic mean of precision and recall, especially useful for imbalanced datasets.

- Completion Rate: Percentage of tasks the agent completes successfully.

- Efficiency: Speed and resource use, such as response latency, runtime, or compute consumed per task.

- Cost: Dollar value spent on resources or computing to run the agent.

- User Satisfaction & Engagement: Feedback and usage frequency.

- Conversion Rate: For agents with sales or lead-generation goals.

- ROI: The business value the agent generates relative to what it costs to build and run, commonly expressed as (value - cost) / cost.

- Adherence to Constraints: Compliance with ethical or operational limits.

- Robustness: The consistency of agent performance across variable scenarios.

- Fairness: Ensuring equitable treatment across demographic groups.
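To make the classification metrics above concrete, here is a minimal Python sketch that computes accuracy, error rate, precision, recall, and F1 from paired ground-truth labels and agent predictions. The function name and the escalation labels are hypothetical; in practice a library such as scikit-learn offers equivalents (`accuracy_score`, `precision_score`, `recall_score`, `f1_score` in `sklearn.metrics`).

```python
# Minimal sketch: binary classification metrics computed from scratch.
# Assumes one agent prediction per ground-truth label, in the same order.


def classification_metrics(y_true, y_pred, positive=1):
    """Return accuracy, error rate, precision, recall and F1 for one positive class."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("need equal-length, non-empty label lists")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if p == positive and t == positive)
    fp = sum(1 for t, p in pairs if p == positive and t != positive)
    fn = sum(1 for t, p in pairs if p != positive and t == positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    # Precision: share of items the agent flagged that were actually positive.
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Recall: share of actual positives that the agent flagged.
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1: harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": accuracy,
        "error_rate": 1 - accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical labels: 1 = "ticket should be escalated", 0 = "handle automatically".
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```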

Clearly documented objectives and data-driven metrics form the backbone of a repeatable, transparent evaluation strategy.
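The business-facing metrics (completion rate, cost, ROI) can be computed from per-task logs. The sketch below assumes a hypothetical `TaskRecord` with a success flag, an attributed cost, and an estimated value per completed task; how you estimate that value is a business decision the code cannot make for you. ROI is computed as (value - cost) / cost.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class TaskRecord:
    completed: bool   # did the agent finish the task successfully?
    cost_usd: float   # compute/API spend attributed to this task
    value_usd: float  # estimated business value of a completed task (your own estimate)


def operational_metrics(records: List[TaskRecord]) -> dict:
    """Completion rate, cost per completed task, and ROI over a batch of tasks."""
    if not records:
        raise ValueError("no task records")
    done = [r for r in records if r.completed]
    total_cost = sum(r.cost_usd for r in records)  # failed tasks still cost money
    total_value = sum(r.value_usd for r in done)   # only completed tasks deliver value
    cost_per_done: Optional[float] = total_cost / len(done) if done else None
    # ROI is undefined when nothing was spent.
    roi: Optional[float] = (total_value - total_cost) / total_cost if total_cost else None
    return {
        "completion_rate": len(done) / len(records),
        "cost_per_completed_task": cost_per_done,
        "roi": roi,
    }


# Hypothetical log: each task costs $0.50 in compute and is worth $4.00 if completed.
log = [
    TaskRecord(True, 0.50, 4.00),
    TaskRecord(True, 0.50, 4.00),
    TaskRecord(False, 0.50, 4.00),
    TaskRecord(True, 0.50, 4.00),
]
ops = operational_metrics(log)
print(ops)
```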

2. Establish a Baseline for Comparison

Any meaningful evaluation requires a point of reference—a baseline. Establishing this baseline can involve:

- Historical System Performance: Compare the AI agent against conventional or legacy systems.

- Human Performance: Benchmarks against human accuracy or efficiency provide context for real-world efficacy.

- Rule-based Methods: Serve as a simple, deterministic alternative against which AI’s advantages can be measured.

By setting this comparative reference, organizations can quantitatively demonstrate the enhancements delivered by their AI agents.
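A baseline comparison is only meaningful when both systems are scored on the same labeled cases. This sketch assumes a hypothetical ticket-routing task and a one-line keyword rule as the rule-based baseline; the queries, labels, and agent outputs are illustrative, not real data.

```python
def accuracy(preds, labels):
    """Fraction of predictions that match the labels."""
    return sum(1 for p, y in zip(preds, labels) if p == y) / len(labels)


def rule_based_router(query: str) -> str:
    """Hypothetical deterministic baseline: keyword match on 'refund'."""
    return "billing" if "refund" in query.lower() else "support"


def compare_to_baseline(agent_preds, queries, labels):
    """Score the agent and the rule-based baseline on the same labeled cases."""
    baseline_preds = [rule_based_router(q) for q in queries]
    agent_acc = accuracy(agent_preds, labels)
    base_acc = accuracy(baseline_preds, labels)
    return {
        "agent_accuracy": agent_acc,
        "baseline_accuracy": base_acc,
        "absolute_gain": agent_acc - base_acc,
        # Relative gain is undefined when the baseline scores zero.
        "relative_gain": (agent_acc - base_acc) / base_acc if base_acc else None,
    }


queries = [
    "I want a refund",
    "The app crashes on login",
    "I was charged twice, please give my money back",
    "How do I reset my password?",
]
labels = ["billing", "support", "billing", "support"]
agent_preds = ["billing", "support", "billing", "support"]  # hypothetical agent outputs
result = compare_to_baseline(agent_preds, queries, labels)
print(result)
```

The keyword rule misses the third query because it never says "refund", which is exactly the kind of gap a baseline comparison is meant to surface.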

3. Choose Comprehensive Evaluation Methods

Offline Evaluation

- Historical Data Analysis: Use existing datasets to simulate the AI agent’s performance in a controlled environment. This enables safe and rapid experimentation.

- A/B Testing: Run different versions (agent vs. no-agent, or agent v1 vs. agent v2) side by side on the same inputs and compare them on key metrics. Run on split live traffic, the same comparison becomes an online experiment.

- Simulations: Test agent reactions to a variety of synthetic scenarios—especially valuable for edge-case testing.
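A minimal offline harness replays recorded cases, plus synthetic edge cases, through each agent version and reports the same score for both, which is the offline form of an A/B comparison. The two "agents" here are hypothetical toy functions that pull a command word out of a request; in a real setup you would swap in calls to your own agent.

```python
from typing import Callable, List, Tuple

Case = Tuple[str, str]  # (input text, expected output)


def offline_eval(agent: Callable[[str], str], cases: List[Case]) -> float:
    """Replay recorded cases through an agent and return the fraction it gets right."""
    hits = 0
    for text, expected in cases:
        try:
            if agent(text) == expected:
                hits += 1
        except Exception:
            # A crash counts as a failure instead of aborting the whole run.
            pass
    return hits / len(cases)


# Two hypothetical agent versions that extract a command word from a request.
def agent_v1(text: str) -> str:
    return text.split()[0].lower()  # raises IndexError on empty input


def agent_v2(text: str) -> str:
    words = text.split()
    return words[0].lower() if words else ""


historical = [("Cancel my order", "cancel"), ("TRACK package 123", "track")]
synthetic_edge_cases = [("", ""), ("   refund   ", "refund")]
cases = historical + synthetic_edge_cases

for name, agent in [("v1", agent_v1), ("v2", agent_v2)]:
    print(name, offline_eval(agent, cases))
```

The empty-input case is synthetic: it rarely shows up in historical logs, but it is enough to separate the two versions here.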

Online Evaluation

- Real-World Deployment: Roll out the agent in production and observe live data.

- User Feedback: Gather direct input from users to augment technical analysis with qualitative insights.

- Ongoing Monitoring: Use dashboards to continuously track vital performance indicators, enabling proactive response to emerging issues.
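For ongoing monitoring, a common pattern is a rolling success rate with an alert threshold. This sketch assumes each live interaction can be labeled success or failure (for example from user feedback or a downstream check); the class name, window size, threshold, and minimum sample count are illustrative, not recommendations.

```python
from collections import deque


class RollingSuccessMonitor:
    """Track the success rate over the most recent `window` live interactions."""

    def __init__(self, window: int = 100, threshold: float = 0.9, min_samples: int = 20):
        self.outcomes = deque(maxlen=window)  # oldest outcomes fall off automatically
        self.threshold = threshold
        self.min_samples = min_samples  # avoid alerting on too little data

    def success_rate(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 0.0

    def record(self, success: bool) -> bool:
        """Record one outcome and return True if an alert should fire."""
        self.outcomes.append(success)
        if len(self.outcomes) < self.min_samples:
            return False
        return self.success_rate() < self.threshold


monitor = RollingSuccessMonitor(window=10, threshold=0.8, min_samples=5)
alerts = [monitor.record(ok) for ok in [True] * 5 + [False] * 2]
print(alerts)
```

In a dashboard setup, `record` would be called from the logging path and a `True` return routed to your alerting channel.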

Combining offline and online evaluation gives a more balanced and complete picture of agent performance.

4. Data Collection and Preparation

The reliability of your evaluation depends on the quality of your underlying data. Follow these best practices:

- Data Quality: Ensure records are clean, consistent, and representative of future use cases. Inadequate data can lead to skewed or misleading metrics.

- Sufficient Data Volume: Work with large enough datasets to support statistically significant findings and avoid random fluctuations.

- Data Preprocessing: Remove duplicates, fill gaps, and normalize values before model evaluation to ensure fair comparisons.
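The preprocessing steps above can be sketched with the standard library alone. This version assumes records are flat dictionaries with hashable values; it drops exact duplicates, fills missing values of one numeric field with the median (one of several reasonable imputation choices), and adds a min-max normalized copy under a hypothetical `<field>_norm` key. For fair comparisons, run every agent version against the same preprocessed dataset so they all share the same fill value and scaling range.

```python
import statistics


def preprocess(records, field):
    """Drop exact duplicates, median-fill missing `field` values,
    and add a min-max normalized copy under f"{field}_norm"."""
    seen, unique = set(), []
    for r in records:
        key = tuple(sorted(r.items()))  # assumes all values are hashable
        if key not in seen:
            seen.add(key)
            unique.append(dict(r))  # copy so the caller's data is untouched
    present = [r[field] for r in unique if r[field] is not None]
    fill = statistics.median(present)  # raises StatisticsError if every value is missing
    for r in unique:
        if r[field] is None:
            r[field] = fill
    lo = min(r[field] for r in unique)
    hi = max(r[field] for r in unique)
    span = hi - lo
    for r in unique:
        r[f"{field}_norm"] = (r[field] - lo) / span if span else 0.0
    return unique


raw = [
    {"id": 1, "latency_ms": 120.0},
    {"id": 1, "latency_ms": 120.0},  # exact duplicate
    {"id": 2, "latency_ms": None},   # gap to fill
    {"id": 3, "latency_ms": 300.0},
    {"id": 4, "latency_ms": 200.0},
]
cleaned = preprocess(raw, "latency_ms")
print(cleaned)
```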

5. Performance Analysis and Results Interpretation

Turning raw results into insight requires structured analysis:

- Statistical Significance: Use hypothesis tests to check whether observed improvements are larger than chance variation alone would plausibly produce.

- Error Analysis: Pinpoint where and why failures occur to fine-tune future iterations.

- Visualization: Leverage charts, graphs, and dashboards to communicate findings clearly to stakeholders.
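For a success-rate metric, one standard significance check is the two-proportion z-test. The sketch below assumes independent samples (for example, users randomly assigned to version A or B) and counts large enough for the normal approximation to hold; the counts in the example are made up.

```python
import math


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Two-sided z-test for a difference between two success rates.

    Uses the pooled normal approximation, which assumes independent samples
    and reasonably large counts of both successes and failures in each group.
    """
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # both rates are 0% or both 100%: no evidence of a difference
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the standard normal
    return z, p_value


# Hypothetical A/B result: v1 succeeded on 420 of 500 tasks, v2 on 455 of 500.
z, p = two_proportion_z_test(420, 500, 455, 500)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the gap is unlikely to be pure chance; it says nothing about whether the gap is large enough to matter for the business, so report the difference itself alongside the p-value.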

The result: an evaluation process that not only measures but also explains and optimizes agent behavior.
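Error analysis often starts with a simple breakdown of failures by category. This sketch assumes each evaluation result carries a hypothetical `category` tag and a `success` flag, and ranks categories by failure count, then by failure rate.

```python
from collections import Counter


def failure_breakdown(results):
    """Return (category, failures, failure_rate) rows, worst categories first."""
    totals = Counter(r["category"] for r in results)
    failures = Counter(r["category"] for r in results if not r["success"])
    # Counter returns 0 for categories with no failures.
    rows = [(cat, failures[cat], failures[cat] / totals[cat]) for cat in totals]
    # Sort by failure count, then failure rate (both descending), then name.
    return sorted(rows, key=lambda row: (-row[1], -row[2], row[0]))


results = [
    {"category": "billing", "success": True},
    {"category": "billing", "success": False},
    {"category": "refunds", "success": False},
    {"category": "refunds", "success": False},
    {"category": "refunds", "success": True},
    {"category": "login", "success": True},
]
for row in failure_breakdown(results):
    print(row)
```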

6. Establish an Iterative Improvement Cycle

Evaluation isn’t a one-time event—it’s an ongoing feedback loop. Here’s how to keep your agent up-to-date and high-performing:

- Continuous Feedback: Use monitoring and user feedback to identify new opportunities for fine-tuning.

- Retraining and Refinement: Incorporate new data or adjust model architectures in response to performance shortcomings.

- Regular Reevaluation: Schedule periodic reviews to ensure your agent adapts to changing data patterns and business priorities.

This cyclical process mirrors common practice among leading enterprise AI platform providers.
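One way to make reevaluation routine is a regression gate: before promoting a retrained agent, compare its metrics with the current version and block the release if any metric gets worse beyond a tolerance. The function name, metric names, 2% relative tolerance, and example numbers are assumptions for illustration.

```python
def regression_gate(current, candidate, lower_is_better=frozenset(), rel_tol=0.02):
    """Return the metrics on which `candidate` is worse than `current` beyond rel_tol.

    Higher values are treated as better unless the metric name is in `lower_is_better`.
    A metric missing from `candidate` counts as a regression.
    """
    regressions = []
    for name, old in current.items():
        new = candidate.get(name)
        if new is None:
            regressions.append(name)
            continue
        allowed = abs(old) * rel_tol  # tolerance scales with the metric's magnitude
        worse = new > old + allowed if name in lower_is_better else new < old - allowed
        if worse:
            regressions.append(name)
    return regressions


current = {"completion_rate": 0.90, "f1": 0.80, "cost_per_task_usd": 0.50}
candidate = {"completion_rate": 0.93, "f1": 0.79, "cost_per_task_usd": 0.60}
blocked = regression_gate(current, candidate, lower_is_better={"cost_per_task_usd"})
print(blocked or "safe to promote")
```

Here the small F1 dip stays within tolerance, but the 20% cost increase blocks promotion; whether that trade-off is acceptable is a judgment for the team, not the gate.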

7. Special Considerations by AI Agent Type

Not all AI agents are alike. The optimal evaluation strategy may differ by agent class:

- Reinforcement Learning Agents: Focus on accumulated rewards, task completion rates, and exploration efficiency across episodes.

- Natural Language Processing Agents: Use domain-specific metrics like BLEU (translation), ROUGE (summarization), or standard classification metrics for chatbots and virtual assistants.

- Computer Vision Agents: Assess performance with metrics such as precision, recall, F1-score, and Intersection over Union (IoU).
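Two of the metrics named above are simple enough to compute directly. The sketch below implements IoU for axis-aligned boxes given as `(x1, y1, x2, y2)` and a simplified ROUGE-1 based on lowercase whitespace tokens. Official ROUGE implementations add tokenization rules and optional stemming, so scores from this sketch may not match published numbers.

```python
from collections import Counter


def iou(box_a, box_b):
    """Intersection over Union for boxes given as (x1, y1, x2, y2) with x1 < x2, y1 < y2."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def rouge1(candidate, reference):
    """Simplified ROUGE-1: clipped unigram overlap on lowercase whitespace tokens."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # `&` keeps the minimum count per word
    precision = overlap / sum(cand.values()) if cand else 0.0
    recall = overlap / sum(ref.values()) if ref else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
print(rouge1("the cat is on the mat", "the cat sat on the mat"))
```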

For a fuller primer on agent types and real-world use cases, see the guide on what an AI agent is.

8. Tools and Frameworks for AI Agent Evaluation

Elevate your evaluation process with specialized tools designed for tracking, visualization, and optimization:

- MLflow: End-to-end machine learning lifecycle management.

- TensorBoard: Visualizes metrics logged during training and evaluation; built for TensorFlow but also usable from other frameworks such as PyTorch.

- Weights & Biases / Comet: Experiment tracking platforms for deeper insights into model behavior.

These platforms streamline the collection, visualization, and comparison of results, helping enterprise teams build, track, and improve AI agent deployments at scale.

9. Ensuring Alignment: Ethics, Fairness, and Compliance

Besides performance, modern organizations must ensure their AI agents adhere to ethical, legal, and operational standards.

- Fairness Audits: Measure disparities in agent outputs across diverse population groups.

- Compliance Monitoring: Ensure adherence to regulatory and sector-specific guidelines.

- Transparency: Document all experiments and evaluation methodologies for auditability and trust.
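A fairness audit can begin by comparing outcome rates across groups, a check often called demographic parity. The sketch assumes records with hypothetical `group` and `approved` fields. Demographic parity is only one of several fairness criteria, and which one fits depends on your use case and regulatory context.

```python
from collections import defaultdict


def outcome_rates_by_group(records, group_key="group", outcome_key="approved"):
    """Share of positive outcomes per group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for r in records:
        tally = counts[r[group_key]]
        tally[1] += 1
        if r[outcome_key]:
            tally[0] += 1
    return {g: pos / total for g, (pos, total) in counts.items()}


def parity_gap(rates):
    """Largest difference in positive-outcome rate between any two groups."""
    return max(rates.values()) - min(rates.values())


records = (
    [{"group": "A", "approved": ok} for ok in [True, True, True, False]]
    + [{"group": "B", "approved": ok} for ok in [True, False, False, False]]
)
rates = outcome_rates_by_group(records)
print(rates, parity_gap(rates))
```

A large gap does not prove the agent is unfair on its own, but it flags where to look more closely, for example at the training data or the decision thresholds.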

A holistic approach not only optimizes results but also protects brand reputation and stakeholder trust in AI’s role in your enterprise.

10. Key Takeaways: Build Continuous Value with Thoughtful Evaluation

Evaluating AI agents is both a science and an art—blending rigorous metric-driven analysis with human insight, ethical reflection, and strategic alignment. Establish clear objectives, track meaningful metrics, benchmark against robust baselines, and embrace a culture of continuous improvement.

In doing so, organizations unlock the full potential of AI, ensuring their agents are not just smart, but resilient, responsible, and truly transformative.

Frequently Asked Questions

1. What are AI agents?

AI agents are autonomous or semi-autonomous software programs that perceive their environment, make decisions, and take actions to achieve specific goals.

2. Why is it important to evaluate AI agent performance?

Thorough evaluation ensures agents are effective, reliable, cost-efficient, and aligned with business objectives—minimizing risks and maximizing ROI.

3. Which metrics are best for measuring AI agent accuracy?

Common metrics include accuracy, precision, recall, F1-score, and error rate. The best choice depends on the specific task and business needs.

4. What role does user feedback play in AI agent evaluation?

User feedback provides valuable qualitative insights, highlighting areas technical metrics may miss—such as usability and satisfaction.

5. How can I set a benchmark for my AI agent’s performance?

Compare against previous systems, human expertise, or rule-based solutions to establish a meaningful baseline.

6. How often should I reevaluate my AI agents?

Regular reviews—quarterly or after major updates—ensure agents adapt to new data and changing business demands.

7. What tools can help track and visualize AI agent results?

MLflow, TensorBoard, Weights & Biases, and Comet are among the most popular for experiment tracking and result visualization.

8. How do I ensure fairness and avoid bias in my AI agent?

Regularly audit outcomes for demographic biases and ensure diverse, representative training data.

9. Are there unique considerations for enterprise AI agent deployments?

Yes—enterprise deployments often require higher standards for compliance, scalability, and reliability.

10. Where can I learn about leading enterprise AI platform solutions?

Explore enterprise AI platforms designed for large-scale AI development, deployment, and management, such as Stack AI.

Unlocking exceptional results from AI agents requires thoughtful evaluation at every stage. By making performance assessment an integral part of your strategy, you pave the way for innovation, agility, and enduring business value.